Deciding on DECIDE

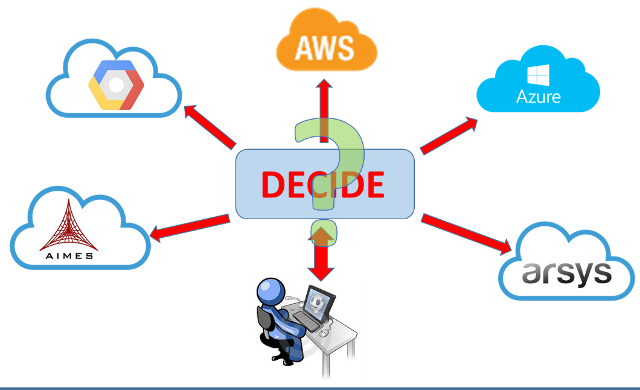

There is a lot of academic and industry talk about using multi-cloud solutions to solve a core problem for application developers and operators. Put simply, their challenge centres on delivering appropriate levels of security, scalability and resilience whilst optimising return on investment. Being able to divide the operational elements of an application between public, private or hybrid cloud provision clearly offers advantages, whether to take advantage of the best pricing or communications or to meet legal requirements. However, the large-scale adoption of multi-cloud is not proceeding at the same pace as the hype. There are sound reasons for that, principally that those potential benefits are not easy to achieve: doing so requires serious effort to ensure that the development and deployment of applications is not only the most operationally effective, but also the most cost effective and as future-proof as possible.

Partial solutions are available, but the DECIDE project was conceived to provide, in a single integrated toolset, ‘enabling techniques and mechanisms to design develop and dynamically deploy multi cloud aware applications in an ecosystem of reliable, interoperable and legally compliant cloud services’. As the project enters its final phases and the individual tools are being integrated into a coherent service offering, the all-important user questions have come to the forefront of the DECIDE agenda. Does it work? Does it work better than what we do now? How easy is it to learn and to use? Is it cost effective?

To help potential ‘service users’ (the individuals and enterprises who are the end users of the service offered) answer those questions, the DECIDE project team is carrying out a series of evaluations as the DECIDE toolset evolves. From the very beginning of the project, over two years ago, usability and user confidence have been key drivers, and as DECIDE enters its final phase, ensuring that it is both effective and easy to use is at the forefront of the partners’ minds. Meeting these twin objectives is key to any future commercial success: DECIDE must not only perform robustly, it must also demonstrate to its potential client base that it can make multi-cloud selection, contracting, deployment and monitoring as efficient and painless as possible. At the project’s inception, it was decided that the consortium would include use case developers and owners, so that the requirements of real-world applications could be used to evaluate the performance of the DECIDE tool suite at each stage of its development and to influence the future direction of that development.

Using real-world use cases for beta testing has the added ‘bonus’ of providing use case reviews that can be shared with other potential end users, helping them to decide (pun entirely intended) whether to make use of the toolset.

To ensure that the evaluation process covers a broad spread of possible applications, three use cases from different sectors have been adopted: a clinical data entry tool (health), a data centre change tracking application (digital infrastructure) and an energy trading platform (commercial interactive). The use case owners also cover the range of possible development problems: developing a new microservices application ‘from scratch’, redeveloping an existing monolithic application, and migrating an application from a Windows environment to Linux. Each use case therefore has different requirements, and these are reflected in the DECIDE tool elements and key results (KRs) that each use case owner will evaluate.

| Key result (KR) | Evaluation dimensions | AIMES | EXPERIS | ARSYS |
|---|---|:---:|:---:|:---:|
| KR1 (Multi-cloud native applications DevOps Framework) | Usability, interoperability | | x | |
| KR2 (DECIDE ARCHITECT) | Usability, reusability | x | x | |
| KR3 (DECIDE OPTIMUS) | Efficiency, usability, flexibility/scalability, interoperability | x | | |
| KR4 (Advanced Cloud Service (meta-) Intermediator, ACSmI) | Availability, flexibility/scalability, interoperability, efficiency | x | | x |
| KR5 (DECIDE ADAPT) | Availability, efficiency, testability | x | x | x |

A robust evaluation process has been agreed between the use case and tool developer partners, following existing and recognised standards including ISO/IEC 25000 [i] and ISO/IEC 9126-4 [ii], as well as the System Usability Scale (SUS) [iii]. The areas addressed are:

● Availability: Availability [iv] is the ratio of the time a system or component is functional to the total time it is required or expected to function. This can be expressed as a direct proportion (for example, 9/10 or 0.9) or as a percentage (for example, 90%).

● Efficiency: Efficiency is a measure of the extent to which input is well used for an intended task or function (output). It often refers specifically to the capability of a given application of effort to produce a specific outcome with a minimum of waste, expense or unnecessary effort.

● Usability: Usability is the ease of use and learnability of a human-made object. The object of use can be a software application, website, book, tool, machine, process, or anything a human interacts with.

● Flexibility/scalability: Software flexibility/scalability [v] can be defined as the ability of software to change easily in response to different user and system requirements.

● Interoperability: Interoperability is a property of a product or system, whose interfaces are completely understood, to work with other products or systems, present or future, without any restricted access or implementation.

● Reusability: The ability to reuse relies in an essential way on the ability to build larger things from smaller parts, and being able to identify commonalities among those parts. Reusability is often a required characteristic of platform software.

● Reliability: The term reliability refers to the ability of a computer-related hardware or software component to consistently perform according to its specifications. In theory, a reliable product is totally free of technical errors.

● Testability: Testability is the degree to which a software artefact (i.e. a software system, software module, or requirements or design document) supports testing in a given test context.
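As a rough illustration of how two of these measures become numbers, the sketch below computes availability as the ratio defined above and scores one completed SUS questionnaire [iii] using the standard SUS rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. This is a minimal sketch, not part of the DECIDE toolset. The function names are hypothetical, and it assumes that functional and required time are given in the same unit and that the evaluations apply standard SUS scoring unmodified.

```python
"""Minimal sketch: availability ratio and standard SUS scoring (hypothetical helpers)."""


def availability(functional_time: float, required_time: float) -> float:
    """Return functional time / required time (both assumed to be in the same unit)."""
    if required_time <= 0:
        raise ValueError("required_time must be positive")
    if not 0 <= functional_time <= required_time:
        raise ValueError("functional_time must lie between 0 and required_time")
    return functional_time / required_time


def sus_score(responses: list[int]) -> float:
    """Score one completed SUS questionnaire on the 0-100 scale.

    Expects 10 answers on a 1-5 agreement scale, in questionnaire order.
    Odd-numbered items (positively worded) contribute response - 1;
    even-numbered items (negatively worded) contribute 5 - response.
    The 0-40 total is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


if __name__ == "__main__":
    ratio = availability(9, 10)
    print(ratio)                  # 0.9
    print(f"{ratio:.0%}")         # 90%
    print(sus_score([5, 1] * 5))  # 100.0 (most favourable answer on every item)
    print(sus_score([3] * 10))    # 50.0 (neutral answer on every item)
```

Individual SUS scores are usually averaged across participants; the alternating sign handling reflects the questionnaire's mix of positively and negatively worded statements.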

An initial “quick and dirty” evaluation, carried out in late 2018 on an early release of the individual, non-integrated DECIDE tools and using immature versions of the use case applications, showed that whilst (not unexpectedly) there was considerable work to do, the DECIDE elements showed promise. In 2019, two iterative evaluations will be completed. The first, in February, with feedback to the tool owners in March, will cover the last non-integrated release of the DECIDE tools; the final evaluation will assess the fully integrated DECIDE toolset. Results and conclusions will not only be fed back to the tool developers to inform technical improvements, but will also be used to inform exploitation planning for the DECIDE suite and, of course, provide the basis for case studies that will assist future potential clients in the process of ‘deciding on DECIDE’.

[i] ISO/IEC 25000, Systems and Software Engineering - Systems and Software Quality Requirements and Evaluation (SQuaRE) - Guide to SQuaRE, https://www.iso.org/standard/64764.html

[ii] ISO/IEC 9126-4:2004, Software Engineering - Product Quality - Quality in use metrics, https://www.iso.org/standard/39752.html

[iii] J. Brooke, “SUS: A quick and dirty usability scale”, Redhatch Consulting Ltd., United Kingdom, 1986.

[v] “Measuring the Scalability of Cloud-based Software Services”, 3 Jul. 2018, https://www.researchgate.net/publication/325323571_Measuring_the_Scalability_of_Cloud-based_Software_Services